Sunday, August 31, 2014

Packrafts in the Parks

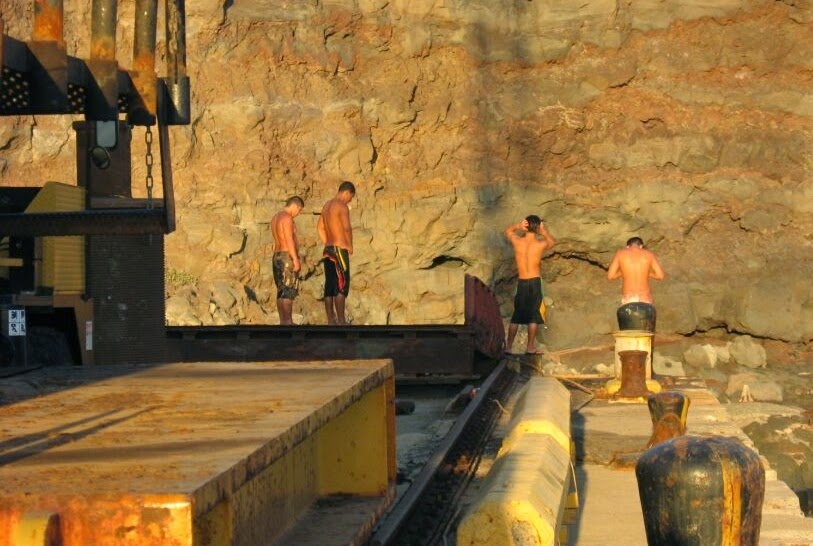

The morning sun was just breaking over Bonanza Ridge when a group of wilderness racers gathered last summer in the decommissioned copper mining town of Kennicott in Alaska’s Wrangell Mountains. The iconic red-and-white industrial buildings from nearly 100 years ago, now a national historic monument and undergoing restoration by the National Park Service, glowed in the light of the new day as Monte Montepare, co-owner of Kennicott Wilderness Guides, faced the crowd. Everyone had gathered for a one-of-a-kind race, a dash on foot up Bonanza, then down the backside to the upper reaches of McCarthy Creek.

McCarthy Creek parallels the spine of Bonanza Ridge for several miles until it curves like a fishhook around the base of Sourdough Peak.

Upon reaching the creek, the racers would dump their packs, inflate the boats they carried with them, then shoot down 10 miles of rapids to Kennicott’s sister town of McCarthy. The finish line, not coincidentally, was right in front of the town’s only bar.

The boat carried by each of these racers is the 21st century incarnation of a design concept that’s been around for a couple decades now. Packrafts are lightweight (about 5 pounds), compact, and easily stuffed into a backpack. Essentially, they’re super-tough one-person rubber rafts, the diminutive cousins of the 16- and 20-footers used for more mainstream river trips. Small size is the secret of their advantage: A packraft gives the wilderness traveler the sort of amphibious capability that humans have longed for since the earliest days of our species. A hundred years ago, your only real option was to build a raft or hope you could find a canoe cached on your side of the river. Now, with a packraft, the backcountry trekker can go virtually anywhere, including a fast boogie up and over a mountain, then downstream through some substantial whitewater in time for beer-thirty.

Montepare welcomed all the racers and laid out the rules in front of the Kennicott Wilderness Guides main office; then head ranger Stephens Harper got up and delivered a safety and environmental briefing every bit as mandatory as the helmet and drysuit each racer was required to have. The first annual McCarthy Creek Packraft Race, which started as a way for Kennicott Wilderness to promote its guiding business and have some fun, had grown in importance from being just a bunch of whitewater bums looking for a thrill.

Pretty much by accident, the company and its owners, as well as the racers, found themselves front and center in a rancorous debate over land use, backcountry permitting and public lands policy taking place thousands of miles from the Wrangell Mountains. The jaundiced eyes of nonprofit conservation groups were watching.

Grand Canyon episode

Back in 2011, an erstwhile river warrior hiked down into the Grand Canyon with a packraft, blew it up, and shoved off into Hance, one of the longer and more difficult rapids. Within seconds he’d dumped his boat and was being sucked down into the gorge below while his girlfriend stood helplessly on the bank.

He made it out by the skin of his teeth, with the whole thing on tape, thanks to a GoPro cam. And, of course, what good is a near-drowning experience if you haven’t posted the video on YouTube? It didn’t take long for the National Park Service staff at the Grand Canyon to see it and decide, based on this one incident, that packrafters were a menace both to themselves and to public lands. The video was pulled after a few days but the damage was done.

This tale might seem familiar to readers here in Alaska, given the recent tragic death of Rob Kehrer while packrafting in Wrangell-St. Elias as part of the Alaska Wilderness Classic race. Earlier this month he launched his packraft into the treacherous Tana River and disappeared behind a wall of whitewater. His body was found on a gravel bar downstream.

One can understand how NPS managers might take a dim view of packrafters in their parks, given events such as these. But as with most thorny management issues, there is a lot more to the story than those few incidents that make the headlines.

by Kris Farmen, Alaska Dispatch | Read more:

Image: Luc Mehl

What Your 1st-Grade Life Says About the Rest of It

In the beginning, when they knew just where to find everyone, they pulled the children out of their classrooms.

They sat in any quiet corner of the schools they could claim: the sociologists from Johns Hopkins and, one at a time, the excitable first-graders. Monica Jaundoo, whose parents never made it past the eighth grade. Danté Washington, a boy with a temper and a dad who drank too much. Ed Klein, who came from a poor white part of town where his mother sold cocaine.

They talked with the sociologists about teachers and report cards, about growing up to become rock stars or police officers. For many of the children, this seldom happened in raucous classrooms or overwhelmed homes: a quiet, one-on-one conversation with an adult eager to hear just about them. “I have this special friend,” Jaundoo thought as a 6-year-old, “who’s only talking to me.”

Later, as the children grew and dispersed, some falling out of the school system and others leaving the city behind, the conversations took place in McDonald’s, in public libraries, in living rooms or lock-ups. The children — 790 of them, representative of the Baltimore public school system’s first-grade class in 1982 — grew harder to track as the patterns among them became clearer.

Over time, their lives were constrained — or cushioned — by the circumstances they were born into, by the employment and education prospects of their parents, by the addictions or job contacts that would become their economic inheritance. Johns Hopkins researchers Karl Alexander and Doris Entwisle watched as less than half of the group graduated high school on time. Before they turned 18, 40 percent of the black girls from low-income homes had given birth to their own babies. At the time of the final interviews, when the children were now adults of 28, more than 10 percent of the black men in the study were incarcerated. Twenty-six of the children, among those they could find at last count, were no longer living.

A mere 4 percent of the first-graders Alexander and Entwisle had classified as the “urban disadvantaged” had by the end of the study completed the college degree that’s become more valuable than ever in the modern economy. A related reality: Just 33 of 314 had left the low-income socioeconomic status of their parents for the middle class by age 28.

Today, the “kids” — as Alexander still calls them — are 37 or 38. Alexander, now 68, retired from Johns Hopkins this summer just as the final, encompassing book from the 25-year study was published. Entwisle, then 89, died of lung cancer last November shortly after the final revisions on the book. Its sober title, “The Long Shadow,” names the thread running through all those numbers and conversations: The families and neighborhoods these children were born into cast a heavy influence over the rest of their lives, from how they fared in the first grade to what they became as grownups.

Some of them — children largely from the middle-class and blue-collar white families still in Baltimore’s public school system in 1982 — grew up to managerial jobs and marriages and their own stable homes. But where success occurred, it was often passed down, through family resources or networks simply out of reach of most of the disadvantaged.

Collectively, the study of their lives, and the outliers among them, tells an unusually detailed story — both empirical and intimate — of the forces that surround and steer children growing up in a post-industrial city like Baltimore.

“The kids they followed grew up in the worst era for big cities in the U.S. at any point in our history,” says Patrick Sharkey, a sociologist at New York University familiar with the research. Their childhood spanned the crack epidemic, the decline of urban industry, the waning national interest in inner cities and the war on poverty.

In that sense, this study is also about Baltimore itself — how it appeared to researchers and their subjects, to children and the adults they would later become.

by Emily Badger, Washington Post | Read more:

Image: Linda Davidson/The Washington Post

The Dawn of the Post-Clinic Abortion

In June 2001, under a cloud-streaked sky, Rebecca Gomperts set out from the Dutch port of Scheveningen in a rented 110-foot ship bound for Ireland. Lashed to the deck was a shipping container, freshly painted light blue and stocked with packets of mifepristone (which used to be called RU-486) and misoprostol. The pills are given to women in the first trimester to induce a miscarriage. Medical abortion, as this procedure is called, had recently become available in the Netherlands. But use of misoprostol and mifepristone to end a pregnancy was illegal in Ireland, where abortion by any means remains against the law, with few exceptions.

Gomperts is a general-practice physician and activist. She first assisted with an abortion 20 years ago on a trip to Guinea, just before she finished medical school in Amsterdam. Three years later, Gomperts went to work as a ship’s doctor on a Greenpeace vessel. Landing in Mexico, she met a girl who was raising her younger siblings because her mother had died during a botched illegal abortion. When the ship traveled to Costa Rica and Panama, women told her about hardships they suffered because they didn’t have access to the procedure. “It was not part of my medical training to talk about illegal abortion and the public-health impact it has,” Gomperts told me this summer. “In those intense discussions with women, it really hit me.”

When she returned to the Netherlands, Gomperts decided she wanted to figure out how to help women like the ones she had met. She did some legal and medical research and concluded that in a Dutch-registered ship governed by Dutch law, she could sail into the harbor of a country where abortion is illegal, take women on board, bring them into international waters, give them the pills at sea and send them home to miscarry. Calling the effort Women on Waves, she chose Dublin as her first destination.

Ten women each gave Gomperts 10,000 Dutch guilders (about $5,500), part of the money needed to rent a boat and pay for a crew. But to comply with Dutch law, she also had to build a mobile abortion clinic. Tapping contacts she made a decade earlier, when she attended art school at night while studying medicine, she got in touch with Joep van Lieshout, a well-known Dutch artist, and persuaded him to design the clinic. They applied for funds from the national arts council and built it together inside the shipping container. When the transport ministry threatened to revoke the ship’s authorization because of the container on deck, van Lieshout faxed them a certificate decreeing the clinic a functional work of art, titled “a-portable.” The ship was allowed to sail, and van Lieshout later showed a mock-up of the clinic at the Venice Biennale.

As the boat sailed toward Dublin, Gomperts and her shipmates readied their store of pills and fielded calls from the press and emails from hundreds of Irish women seeking appointments. The onslaught of interest took them by surprise. So did a controversy that was starting to brew back home. Conservative politicians in the Netherlands denounced Gomperts for potentially breaking a law that required a special license for any doctor to provide an abortion after six and a half weeks of pregnancy. Gomperts had applied for it a few months earlier and received no reply. She set sail anyway, planning to perform abortions only up to six and a half weeks if the license did not come through.

When Gomperts’s ship docked in Dublin, she still didn’t have the license. Irish women’s groups were divided over what to do. Gomperts decided she couldn’t go ahead without their united support and told a group of reporters and protesters that she wouldn’t be able to give out a single pill. “This is just the first of many trips that we plan to make,” she said from the shore, wrapped in a blanket, a scene that is captured in “Vessel,” a documentary about her work that will be released this winter. Gomperts was accused of misleading women. A headline in The Telegraph in London read: “Abortion Boat Admits Dublin Voyage Was a Publicity Sham.”

Gomperts set sail again two years later, this time resolving to perform abortions only up to six and a half weeks. She went to Poland first and to Portugal in 2004. The Portuguese minister of defense sent two warships to stop the boat, then just 12 miles offshore, from entering national waters. No local boat could be found to ferry out the women who were waiting onshore. “In the beginning we were very pissed off, thinking the campaign was failing because the ship couldn’t get in,” one Portuguese activist says in “Vessel.” “But at a certain point, we realized that was the best thing that could ever happen. Because we had media coverage from everywhere.”

Without consulting her local allies, Gomperts changed strategy. She appeared on a Portuguese talk show, held up a pack of pills on-screen and explained exactly how women could induce an abortion at home — specifying the number of pills they needed to take, at intervals, and warning that they might feel pain. A Portuguese anti-abortion campaigner who was also on the show challenged the ship’s operation on legal grounds. “Excuse me,” Gomperts said. “I really think you should not talk about things that you don’t know anything about, O.K. . . . I know what I can do within the law.” Looking directly at him, she added, “Concerning pregnancy, you’re a man, you can walk away when your girlfriend is pregnant. I’m pregnant now, and I had an abortion when I was — a long time ago. And I’m very happy that I have the choice to continue my pregnancy how I want, and that I had the choice to end it when I needed it.” She pointed at the man. “You have never given birth, so you don’t know what it means to do that.”

Two and a half years later, Portugal legalized abortion. As word of Gomperts’s TV appearance spread, activists in other countries saw it as a breakthrough. Gomperts had communicated directly to women what was still, in many places, a well-kept secret: There were pills on the market with the power to end a pregnancy. Emails from women all over the world poured into Women on Waves, asking about the medication and how to get it. Gomperts wanted to help women “give themselves permission” to take the pills, as she puts it, with as little involvement by the government, or the medical profession, as possible. She realized that there was an easier way to do this than showing up in a port. She didn’t need a ship. She just needed the Internet.

Does It Help to Know History?

About a year ago, I wrote about some attempts to explain why anyone would, or ought to, study English in college. The point, I thought, was not that studying English gives anyone some practical advantage over non-English majors, but that it enables us to enter, as equals, into a long-existing, ongoing conversation. It isn’t productive in a tangible sense; it’s productive in a human sense. The action, whether rewarded or not, really is its own reward. The activity is the answer.

It might be worth asking similar questions about the value of studying, or at least, reading, history these days, since it is a subject that comes to mind many mornings on the op-ed page. Every writer, of every political flavor, has some neat historical analogy, or mini-lesson, with which to preface an argument for why we ought to bomb these guys or side with those guys against the guys we were bombing before. But the best argument for reading history is not that it will show us the right thing to do in one case or the other, but rather that it will show us why even doing the right thing rarely works out. The advantage of having a historical sense is not that it will lead you to some quarry of instructions, the way that Superman can regularly return to the Fortress of Solitude to get instructions from his dad, but that it will teach you that no such crystal cave exists. What history generally “teaches” is how hard it is for anyone to control it, including the people who think they’re making it.

Roger Cohen, for instance, wrote on Wednesday about all the mistakes that the United States is supposed to have made in the Middle East over the past decade, with the implicit notion that there are two histories: one recent, in which everything that the United States has done has been ill-timed and disastrous; and then some other, superior, alternate history, in which imperial Western powers sagaciously, indeed, surgically, intervened in the region, wisely picking the right sides and thoughtful leaders, promoting militants without aiding fanaticism, and generally aiding the cause of peace and prosperity. This never happened. As the Libyan intervention demonstrates, the best will in the world—and, seemingly, the best candidates for our support—can’t cure broken polities quickly. What “history” shows is that the same forces that led to the Mahdi’s rebellion in Sudan more than a century ago—rage at the presence of a colonial master; a mad turn towards an imaginary past as a means to equal the score—keep coming back and remain just as resistant to management, close up or at a distance, as they did before. ISIS is a horrible group doing horrible things, and there are many factors behind its rise. But they came to be a threat and a power less because of all we didn’t do than because of certain things we did do—foremost among them that massive, forward intervention, the Iraq War. (The historical question to which ISIS is the answer is: What could possibly be worse than Saddam Hussein?)

Another, domestic example of historical blindness is the current cult of the political hypersagacity of Lyndon B. Johnson. L.B.J. was indeed a ruthless political operator and, when he had big majorities, got big bills passed—the Civil Rights Act, for one. He also engineered, and masterfully bullied through Congress, the Vietnam War, a moral and strategic catastrophe that ripped the United States apart and, more important, visited a kind of hell on the Vietnamese. It also led American soldiers to commit war crimes, almost all left unpunished, of a kind that it still shrivels the heart to read about. Johnson did many good things, but to use him as a positive counterexample of leadership to Barack Obama or anyone else is marginally insane.

Johnson’s tragedy was critically tied to the cult of action, of being tough and not just sitting there and watching. But not doing things too disastrously is not some minimal achievement; it is a maximal achievement, rarely managed. Studying history doesn’t argue for nothing-ism, but it makes a very good case for minimalism: for doing the least violent thing possible that might help prevent more violence from happening.

The real sin that the absence of a historical sense encourages is presentism, in the sense of exaggerating our present problems out of all proportion to those that have previously existed. It lies in believing that things are much worse than they have ever been—and, thus, than they really are—or are uniquely threatening rather than familiarly difficult. Every episode becomes an epidemic, every image is turned into a permanent injury, and each crisis is a historical crisis in need of urgent aggressive handling—even if all experience shows that aggressive handling of such situations has, in the past, quite often made things worse. (The history of medicine is that no matter how many interventions are badly made, the experts who intervene make more: the sixteenth-century doctors who bled and cupped their patients and watched them die just bled and cupped others more.) What history actually shows is that nothing works out as planned, and that everything has unintentional consequences. History doesn’t show that we should never go to war—sometimes there’s no better alternative. But it does show that the results are entirely uncontrollable, and that we are far more likely to be made by history than to make it. History is past, and singular, and the same year never comes round twice.

by Adam Gopnik, New Yorker | Read more:

Image: Nathan Huang

Saturday, August 30, 2014

Predictive First: How A New Era Of Apps Will Change The Game

Over the past several decades, enterprise technology has consistently followed a trail that’s been blazed by top consumer tech brands. This has certainly been true of delivery models – first there were software CDs, then the cloud, and now all kinds of mobile apps. In tandem with this shift, the way we build applications has changed and we’re increasingly learning the benefits of taking a mobile-first approach to software development.

Case in point: Facebook, which of course began as a desktop app, struggled to keep up with emerging mobile-first experiences like Instagram and WhatsApp, and ended up acquiring them for billions of dollars to play catch-up.

The Predictive-First Revolution

Recent events like the acquisition of RelateIQ by Salesforce demonstrate that we’re at the beginning of another shift toward a new age of predictive-first applications. The value of data science and predictive analytics has been proven again and again in the consumer landscape by products like Siri, Waze and Pandora.

Big consumer brands are going even deeper, investing in artificial intelligence (AI) models such as “deep learning.” Earlier this year, Google spent $400 million to snap up AI company DeepMind, and just a few weeks ago, Twitter bought another sophisticated machine-learning startup called MadBits. Even Microsoft is jumping on the bandwagon, with claims that its “Project Adam” network is faster than the leading AI system, Google Brain, and that its Cortana virtual personal assistant is smarter than Apple’s Siri.

The battle for the best data science is clearly underway. Expect even more data-intelligent applications to emerge beyond the ones you use every day like Google web search. In fact, this shift is long overdue for enterprise software.

Predictive-first developers are well poised to overtake the incumbents because predictive apps enable people to work smarter and reduce their workloads even more dramatically than last decade’s basic data bookkeeping approaches to customer relationship management, enterprise resource planning and human resources systems.

Look at how Bluenose is using predictive analytics to help companies engage at-risk customers and identify drivers of churn, how Stripe’s payments solution is leveraging machine learning to detect fraud, or how Gild is mining big data to help companies identify the best talent.

These products are revolutionizing how companies operate by using machine learning and predictive modeling techniques to factor in thousands of signals about whatever problem a business is trying to solve, and feeding that insight directly into day-to-day decision workflows. But predictive technologies aren’t the kind of tools you can just add later. Developers can’t bolt predictive onto CRM, marketing automation, applicant tracking, or payroll platforms after the fact. You need to think predictive from day one to fully reap the benefits.

by Vik Singh, TechCrunch | Read more:

Image: Holger Neimann

Friday, August 29, 2014

Seven Days and Nights in the World's Largest, and Rowdiest Retirement Community

[ed. I've posted about The Villages before, but this is another good, ground-level perspective. Obviously, the alternative community's raison d'etre strikes a resonant chord with a number of people. Not me.]

Seventy miles northwest of Orlando International Airport, amid the sprawling, flat central Florida nothingness — past all of those billboards for Jesus and unborn fetuses and boiled peanuts and gator meat — springs up a town called Wildwood. Storefront churches. O’Shucks Oyster Bar. Family Dollar. Nordic Gun & Pawn. A community center with a playground overgrown by weeds. Vast swaths of tree-dotted pastureland. This area used to be the very center of Florida’s now fast-disappearing cattle industry. The houses are low-slung, pale stucco. One has a weight bench in the yard. There’s a rail yard crowded with static freight trains. The owners of a dingy single-wide proudly fly the stars and bars.

And then, suddenly, unexpectedly, Wildwood’s drabness explodes into green Southern splendor: majestic oaks bearing spindly fronds of Spanish moss that hang down almost to the ground. What was once rolling pasture land has been leveled with clay and sand. Acres of palmetto, hummock, and pine forest clear-cut and covered with vivid sod. All around me, old men drive golf carts styled to look like German luxury automobiles or that have tinted windows and enclosures to guard against the morning chill, along a wide, paved cart path. It’s a bizarre sensation, like happening upon a geriatric man’s vision of heaven itself. I have just entered The Villages.

This is one of the fastest-growing small cities in America, a place so intoxicating that weekend visitors frequently impulse-purchase $200,000 homes. The community real estate office sells about 250 houses every month. The grass is always a deep Pakistan green. The sunrises and sunsets are so intensely pink and orange and red they look computer-enhanced. The water in the public pools is always the perfect temperature. Residents can play golf on one of 40 courses every day for free. Happy hour begins at 11 a.m. Musical entertainment can be found in three town squares 365 nights a year. It’s landlocked but somehow still feels coastal. There’s no (visible) poverty or suffering. Free, consensual, noncommittal sex with a new partner every night is an option. There’s zero litter or dog shit on the sidewalks and hardly any crime and the laws governing the outside world don’t seem to apply here. You can be the you you’ve always dreamed of.

One hundred thousand souls over the age of 55 live here, packed into 54,000 homes spread over 32 square miles and three counties, a greater expanse of land than the island of Manhattan. Increasingly, this is how Americans are spending their golden years — not in the cities and towns where they established their roots, but in communities with people their own age, with similar interests and values. Trailer parks are popping up outside the gates; my aunt and uncle spend the summer months in western Pennsylvania in a gated 55-plus community, and when the weather turns they live in one through the winter to play golf and line-dance in the town squares.

There are people, younger than 55, generally, who suspect that this all seems too good to be true. They — we — point to the elusive, all-powerful billionaire developer who lords over, and profits from, every aspect of his residents’ lives; or the ersatz approximation of some never-realized Main Street USA idyll — so white, so safe — exemplified by Mitt Romney’s tone-deaf rendition of “God Bless America,” performed at one of his many campaign swings through The Villages. But those who live and will likely die here and who feel they’ve earned the right to indulge themselves aren’t anguishing over it. I am living here for a week to figure out if The Villages is a supersize, reinvigorated vision of the American dream, or a caricature, or if there’s even a difference. The question I’m here to try to answer is a scary one: How do we want to finish our lives?

Thursday, August 28, 2014

America’s Tech Guru Steps Down—But He’s Not Done Rebooting the Government

The White House confirmed today the rumors that Todd Park, the nation’s Chief Technology Officer and the spiritual leader of its effort to reform the way the government uses technology, is leaving his post. Largely for family reasons—a long-delayed promise to his wife to raise their family in California—he’s moving back to the Bay Area he left when he began working for President Barack Obama in 2009.

But Park is not departing the government, just continuing his efforts on a more relevant coast. Starting in September, he’s assuming a new post, so new that the White House had to figure out what to call him. It finally settled on technology adviser to the White House based in Silicon Valley. But Park knows how he will describe himself: the dude in the Valley who’s working for the president. President Obama said in a statement, “Todd has been, and will continue to be, a key member of my administration.” Park will lead the effort to recruit top talent to help the federal government overhaul its IT. In a sense, he is doubling down on an initiative he’s already set well into motion: bringing a Silicon Valley sensibility to the public sector.

It’s a continuation of what Park has already been doing for months. If you were at the surprisingly louche headquarters of the nonprofit Mozilla Foundation in Mountain View, California, one evening in June, you could have seen for yourself. Park was looking for recruits among the high-performing engineers of Silicon Valley, a group that generally ignores the government.

There were about a hundred of them, filling several lounges and conference rooms. As they waited, they nibbled on the free snacks and beverages from the open pantry; pizza would arrive later. Park, a middle-aged Asian American in a blue polo shirt, approached a makeshift podium. Though he hates the spotlight, in events like these—where his passion for reforming the moribund state of government information technology flares—he has a surprising propensity for breathing fire.

“America needs you!” he said to the crowd. “Not a year from now! But Right. The. Fuck. Now!”

Indeed, America needs them, badly. Astonishing advances in computer technology and connectivity have dramatically transformed just about every aspect of society but government. Achievements that Internet companies seem to pull off effortlessly—innovative, easy-to-use services embraced by hundreds of millions of people—are tougher than Mars probes for federal agencies to execute. The recent history of government IT initiatives reads like a catalog of overspending, delays, and screwups. The Social Security Administration has spent six years and $300 million on a revamp of its disability-claim-filing process that still isn’t finished. The FBI took more than a decade to complete a case-filing system in 2012 at a cost of $670 million. And this summer a routine software update fried the State Department database used in processing visas; the fix took weeks, ruining travel plans for thousands.

Park knows the problem is systemic—a mindset that locks federal IT into obsolete practices—“a lot of people in government are, like, suspended in amber,” he said to the crowd at Mozilla. In the rest of the tech world, nimbleness, speed, risk-taking and relentless testing are second nature, essential to surviving in a competitive landscape that works to the benefit of consumers. But the federal government’s IT mentality is still rooted in caution, as if the digital transformation that has changed our lives is to be regarded with the utmost suspicion. It favors security over experimentation and adherence to bureaucratic procedure over agile problem-solving. That has led to an inherently sclerotic and corruptible system that doesn’t just hamper innovation, it leaves government IT permanently lagging, unable to perform even the most basic functions we expect. So it’s not at all surprising that the government has been unable to attract the world-class engineers who might be able to fix this mess, a fact that helps perpetuate a cycle of substandard services and poorly performing agencies that seems to confirm the canard that anything produced by government is prima facie lousy. “If we don’t get this right,” says Tom Freedman, coauthor of Future of Failure, a 61-page study on the subject for the Ford Foundation, “the future of governing effectively is in real question.”

No one believes this more deeply than Park, a Harvard-educated son of Korean immigrants. Mozilla board member and LinkedIn founder Reid Hoffman had secured the venue on short notice. (“I do what I can to help Todd,” Hoffman later explained. “We’re very fortunate to have him.”) Park, 41, founded two health IT companies—athenahealth and Castlight Health—and led them to successful IPOs before joining the Department of Health and Human Services in 2009 as CTO. In 2012, President Obama named him CTO of the entire US. Last fall, Park’s stress levels increased dramatically when he caught the hot-potato task of rebooting the disastrously dysfunctional HealthCare.gov website. But he was also given special emergency dispensation to ignore all the usual government IT procedures and strictures, permission that he used to pull together a so-called Ad Hoc team of Silicon Valley talent. The team ultimately rebooted the site and in the process provided a potential blueprint for reform. What if Park could duplicate this tech surge, creating similar squads of Silicon Valley types, parachuting them into bureaucracies to fix pressing tech problems? Could they actually clear the way for a golden era of gov-tech, where transformative apps were as likely to come from DC as they were from San Francisco or Mountain View, and people loved to use federal services as much as Googling and buying products on Amazon?

Park wants to move government IT into the open source, cloud-based, rapid-iteration environment that is second nature to the crowd considering his pitch tonight. The president has given reformers like him leave, he told them, “to blow everything the fuck up and make it radically better.” This means taking on big-pocketed federal contractors, risk-averse bureaucrats, and politicians who may rail at overruns but thrive on contributions from those benefiting from the waste.

But Park is not departing the government, just continuing his efforts on a more relevant coast. Starting in September, he’s assuming a new post, so new that the White House had to figure out what to call him. It finally settled on technology adviser to the White House based in Silicon Valley. But Park knows how he will describe himself: the dude in the Valley who’s working for the president. President Obama said in a statement, “Todd has been, and will continue to be, a key member of my administration.” Park will lead the effort to recruit top talent to help the federal government overhaul its IT. In a sense, he is doubling down on an initiative he’s already set well into motion: bringing a Silicon Valley sensibility to the public sector.

It’s a continuation of what Park has already been doing for months. If you were at the surprisingly louche headquarters of the nonprofit Mozilla Foundation in Mountain View, California, one evening in June, you could have seen for yourself. Park was looking for recruits among the high-performing engineers of Silicon Valley, a group that generally ignores the government.

There were about a hundred of them, filling several lounges and conference rooms. As they waited, they nibbled on the free snacks and beverages from the open pantry; pizza would arrive later. Park, a middle-aged Asian American in a blue polo shirt, approached a makeshift podium. Though he hates the spotlight, in events like these, where his passion for reforming the moribund state of government information technology flares, he has a surprising propensity for breathing fire.

“America needs you!” he said to the crowd. “Not a year from now! But Right. The. Fuck. Now!”

Indeed, America needs them, badly. Astonishing advances in computer technology and connectivity have dramatically transformed just about every aspect of society but government. Achievements that Internet companies seem to pull off effortlessly—innovative, easy-to-use services embraced by hundreds of millions of people—are tougher than Mars probes for federal agencies to execute. The recent history of government IT initiatives reads like a catalog of overspending, delays, and screwups. The Social Security Administration has spent six years and $300 million on a revamp of its disability-claim-filing process that still isn’t finished. The FBI took more than a decade to complete a case-filing system in 2012 at a cost of $670 million. And this summer a routine software update fried the State Department database used in processing visas; the fix took weeks, ruining travel plans for thousands.

Park knows the problem is systemic—a mindset that locks federal IT into obsolete practices—“a lot of people in government are, like, suspended in amber,” he said to the crowd at Mozilla. In the rest of the tech world, nimbleness, speed, risk-taking and relentless testing are second nature, essential to surviving in a competitive landscape that works to the benefit of consumers. But the federal government’s IT mentality is still rooted in caution, as if the digital transformation that has changed our lives is to be regarded with the utmost suspicion. It favors security over experimentation and adherence to bureaucratic procedure over agile problem-solving. That has led to an inherently sclerotic and corruptible system that doesn’t just hamper innovation, it leaves government IT permanently lagging, unable to perform even the most basic functions we expect. So it’s not at all surprising that the government has been unable to attract the world-class engineers who might be able to fix this mess, a fact that helps perpetuate a cycle of substandard services and poorly performing agencies that seems to confirm the canard that anything produced by government is prima facie lousy. “If we don’t get this right,” says Tom Freedman, coauthor of Future of Failure, a 61-page study on the subject for the Ford Foundation, “the future of governing effectively is in real question.”

No one believes this more deeply than Park, a Harvard-educated son of Korean immigrants. Mozilla board member and LinkedIn founder Reid Hoffman had secured the venue on short notice. (“I do what I can to help Todd,” Hoffman later explained. “We’re very fortunate to have him.”) Park, 41, founded two health IT companies—athenahealth and Castlight Health—and led them to successful IPOs before joining the Department of Health and Human Services in 2009 as CTO. In 2012, President Obama named him CTO of the entire US. Last fall, Park’s stress levels increased dramatically when he caught the hot-potato task of rebooting the disastrously dysfunctional HealthCare.gov website. But he was also given special emergency dispensation to ignore all the usual government IT procedures and strictures, permission that he used to pull together a so-called Ad Hoc team of Silicon Valley talent. The team ultimately rebooted the site and in the process provided a potential blueprint for reform. What if Park could duplicate this tech surge, creating similar squads of Silicon Valley types, parachuting them into bureaucracies to fix pressing tech problems? Could they actually clear the way for a golden era of gov-tech, where transformative apps were as likely to come from DC as they were from San Francisco or Mountain View, and people loved to use federal services as much as Googling and buying products on Amazon?

Park wants to move government IT into the open source, cloud-based, rapid-iteration environment that is second nature to the crowd considering his pitch tonight. The president has given reformers like him leave, he told them, “to blow everything the fuck up and make it radically better.” This means taking on big-pocketed federal contractors, risk-averse bureaucrats, and politicians who may rail at overruns but thrive on contributions from those benefiting from the waste.

by Steven Levy, Wired | Read more:

Image: Michael George

André 3000 Is Moving On in Film, Music and Life

Since April, André 3000 has been on the road, traveling from festival to festival with his old partner Big Boi to celebrate the 20th anniversary of their debut album as Outkast. And on Sept. 26, he’ll star, under his original name, André Benjamin, as Jimi Hendrix in “Jimi: All Is by My Side,” a biopic about the year just before Hendrix’s breakthrough, when he moved to London, underwent a style transformation and squared off against Eric Clapton.

Don’t let those things fool you. Over the eight years since the last Outkast project, the Prohibition-era film and album “Idlewild,” André 3000, now 39, has become, through some combination of happenstance and reluctance, one of the most reclusive figures in modern pop, verging on the chimerical.

Invisible but for his fingerprints, that is. For the better part of his career, André 3000 has been a pioneer, sometimes to his detriment. Outkast was a titan of Southern hip-hop when it was still being maligned by coastal rap purists. On the 2003 double album “Speakerboxxx/The Love Below,” which has been certified 11 times platinum, he effectively abandoned rapping altogether in favor of tender singing, long before melody had become hip-hop’s coin of the realm. His forays into fashion (Benjamin Bixby) and animated television (“Class of 3000”) would have made far more sense — and had a far bigger impact — a couple years down the line. In many ways, André 3000 anticipated the sound and shape of modern hip-hop ambition.

And yet here he is, on a quiet summer afternoon in his hometown, dressed in a hospital scrubs shirt, paint-splattered jeans and black wrestling shoes, talking for several hours before heading to the studio to work on a song he’s producing for Aretha Franklin’s coming album. In conversation, he’s open-eared, contemplative and un-self-conscious, a calm artist who betrays no doubt about the purity of his needs. And he’s a careful student of Hendrix, nailing his sing-songy accent (likening it to Snagglepuss) and even losing 20 pounds off his already slim frame for the part.

“I wanted André for the role, beyond the music, because of where he was psychologically — his curiosity about the world was a lot like Jimi,” said John Ridley, the film’s writer and director, who also wrote the screenplay for “12 Years a Slave.”

In the interview, excerpts from which are below, André 3000 spoke frankly about a tentative return to the spotlight that has at times been tumultuous — the loss of both of his parents, followed by early tour appearances that drew criticism and concern — as well as his bouts with self-doubt and his continuing attempts to redefine himself as an artist. “You do the world a better service,” he said, “by sticking to your guns.”

by Jon Caramanica, NY Times | Read more:

Image: Patrick Redmond/XLrator Media

The New Commute

Americans already spend an estimated 463 hours, or 19 days annually, inside their cars, 38 hours of which, on average (almost an entire work week), are spent either stalled or creeping along in traffic. Because congestion is now so prevalent, Americans factor up to 60 minutes of travel time for important trips (like to the airport) that might normally take only 20 minutes. All of this congestion is caused, to a significant degree, by a singular fact—that most commuters in the U.S. (76.4 percent) not only drive to work, but they drive to work alone. For people like Paul Minett, this number represents a spectacular inefficiency, all those empty and available passenger seats flying by on the highway like a vast river of opportunity. And so the next realm of transportation solutions is based on the idea that if we can’t build our way out of our traffic problems, we might be able to think our way out, devising technological solutions that try to fill those empty seats. A lot of that thinking, it turns out, has been happening in San Francisco. (...)

First launched in 2008, Avego’s ridesharing commuter app promised to extend the social media cloud in ways that could land you happily in someone’s unused passenger seat. Sean O’Sullivan, the company’s founder, has described their product as a cross between car-pooling, public transport, and eBay. By using the app, one could, within minutes, or perhaps a day in advance, find a ride with someone going from downtown San Francisco, say, to Sonoma County—with no more planning than it takes to update your Facebook page. Put this tool in the hands of many tens of thousands of users, so goes the vision, and add to it a platform for evaluating riders, a method of automated financial transactions, and a variety of incentives and rewards for participating in the scheme, and you could have something truly revolutionary on your hands.