Four gun-toting biologists scramble out of a helicopter on Southampton Island in northern Canada. Warily scanning the horizon for polar bears, they set off in hip waders across the tundra that stretches to the ice-choked coast of Hudson Bay.

Helicopter time runs at almost US$2,000 per hour, and the researchers have just 90 minutes on the ground to count shorebirds that have come to breed on the windswept barrens near the Arctic Circle. Travel is costly for the birds, too. Sandpipers, plovers and red knots have flown here from the tropics and far reaches of the Southern Hemisphere. They make these epic round-trip journeys each year, some flying farther than the distance to the Moon over the course of their lifetimes.

The birds cannot, however, outfly the threats along their path. Shorebird populations have shrunk, on average, by an estimated 70% across North America since 1973, and the species that breed in the Arctic are among the hardest hit [1]. The crashing numbers, seen in many shorebird populations around the world, have prompted wildlife agencies and scientists to warn that, without action, some species might go extinct.

Although the trend is clear, the underlying causes are not. That’s because shorebirds travel thousands of kilometres a year, and encounter so many threats along the way that it is hard to decipher which are the most damaging. Evidence suggests that rapidly changing climate conditions in the Arctic are taking a toll, but that is just one of many offenders. Other culprits include coastal development, hunting in the Caribbean and agricultural shifts in North America. The challenge is to identify the most serious problems and then develop plans to help shorebirds to bounce back.

“It’s inherently complicated — these birds travel the globe, so it could be anything, anywhere, along the way,” says ecologist Paul Smith, a research scientist at Canada’s National Wildlife Research Centre in Ottawa who has come to Southampton Island to gather clues about the ominous declines. He heads a leading group assessing how shorebirds are coping with the powerful forces altering northern ecosystems. (...)

Shorebirds stream north on four main flyways in North America and Eurasia, and many species are in trouble. The State of North America’s Birds 2016 report [1], released jointly by wildlife agencies in the United States, Canada and Mexico, charts the massive drop in shorebird populations over the past 40 years.

The East Asian–Australasian Flyway, where shorelines and wetlands have been hit hard by development, has even more threatened species. The spoon-billed sandpiper (Calidris pygmaea) is so “critically endangered” that there may be just a few hundred left, according to the International Union for Conservation of Nature.

Red knots are of major concern on several continents. The subspecies that breeds in the Canadian Arctic, the rufa red knot, has experienced a 75% decline in numbers since the 1980s, and is now listed as endangered in Canada. “The red knot gives me that uncomfortable feeling,” says Rausch, a shorebird biologist with the Canadian Wildlife Service in Yellowknife. She has yet to find a single rufa-red-knot nest, despite spending four summers surveying what has long been considered the bird’s prime breeding habitat.

The main problem for the rufa red knots is thought to lie more than 3,000 kilometres to the south. During their migration from South America, the birds stop to feed on energy-rich eggs laid by horseshoe crabs (Limulus polyphemus) in Delaware Bay (see ‘Tracking trouble in the Arctic’). Research suggests that the crabs have been so overharvested that the red knots have become deprived of much-needed fuel.

by Margaret Munro, Nature | Read more:

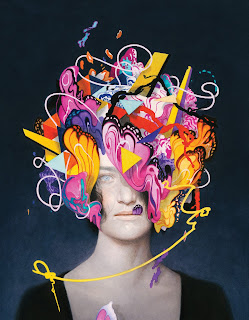

Image: Malkolm Boothroyd
