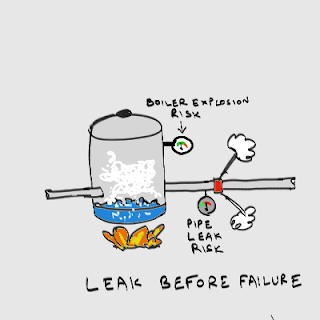

Leak before failure is a fascinating engineering principle, used in the design of things like nuclear power plants. The idea, loosely stated, is that things should fail in easily recoverable non-critical ways (such as leaks) before they fail in catastrophic ways (such as explosions or meltdowns). This means that various components and subsystems are designed with varying margins of safety, so that they fail at different times, under different conditions, in ways that help you prevent bigger disasters using smaller ones.

So for example, if pressure in a pipe gets too high, a valve should fail, and alert you to the fact that something is making pressure rise above the normal range, allowing you to figure it out and fix it before it gets so high that a boiler explosion scenario is triggered. Unlike canary-in-the-coalmine systems or fault monitoring/recovery systems, leak-before-failure systems have failure robustnesses designed organically into operating components, rather than being bolted on in the form of failure management systems.

Leak-before-failure is more than just a clever idea restricted to safety issues. Understood in suitably general terms, it provides an illuminating perspective on how companies scale.

Learning as Inefficiency

If you stop to think about it for a moment, leak-before-failure is a type of intrinsic inefficiency where monitoring and fault-detection systems are extrinsic overheads. A leak-before-failure design implies that some parts of the system are over-designed relative to others, with respect to the nominal operating envelope of the system. In a chain with a clear weakest link, the other links can be thought of as having been over-designed to varying degrees.

In the simplest case, leak-before-failure is like deliberately designing a chain with a calibrated amount of non-uniformity in the links, to control where the weakest link lies,. You can imagine, for instance, a chain with one link being structurally weaker than the rest, so it is the first to break under tensile stress (possibly in a way that decouples the two ends of the chain in a safe way as illustrated below).

Failure landscapes designed on the basis of leak-before-failure principles can sometimes do more than detect certain exceptional conditions. They might even prevent higher-risk scenarios by increasing the probability of lower-risk scenarios. One example is what is known as sacrificial protection: using a metal that oxidizes more easily to protect one that oxidizes less easily (magnesium is often used to protect steel pipes if I am remembering my undergrad metallurgy class right).

The opposite of leak-before-failure is another idea in engineering called design optimization, which is based on the exact opposite principle that all parts of a system should fail simultaneously. This is the equivalent of designing a chain with such extraordinarily high uniformity that at a certain stress level, all the links break at once (or what is roughly an equivalent thing, the probability distribution of link failure becomes a uniform distribution, with equal expectation that any link could be the first to break, based on invisible and unmodeled non-uniformities). (...)

Leak-before-failure can be understood, in critical-path terms, as moving the bottleneck and critical path to a locus that allows a system to be primed for a particular type of high-value learning. Instead of putting it where you maximize output, utilization, productivity, or any of the other classic “lean” measures of performance.

Or to put it another way, leak-before-failure is about figuring out where to put the fat. Or to put it yet another way, it’s about figuring out how to allocate the antifragility budget. Or to put it a third way, it’s about designing systems with unique snowflake building blocks. Or to put it a fourth way, it is to swap the sacred and profane values of industrial mass manufacturing. Or to put it a fifth way, it’s about designing for a bigger envelope of known and unknown contingencies around the nominal operating regime.

Or to put it in a sixth way, and my favorite way, it’s about designing for economies of variety. Learning in open-ended ways gets cheaper with increasing (and nominally unnecessary) diversity, variability and uniqueness in system design.

Note that you sometimes don’t need to explicitly know what kind of failure scenario you’re designing for. Introducing even random variations in non-critical components that have identical nominal designs is a way to get there (one example of this is the practice, in data centers, of having multiple generations of hardware, especially hard disks, in the architecture)

The fact that you can think of the core idea in so many different ways should tell you that there is no formula for leak-before-failure thinking: it is a kind of creative-play design game which I call fat thinking. To get to economies of scale and scope, you have to think lean. To get to economies of variety on the other hand, you have to think fat.

by Venkatesh Rao, Ribbonfarm | Read more:

Images: uncredited

Leak-before-failure is more than just a clever idea restricted to safety issues. Understood in suitably general terms, it provides an illuminating perspective on how companies scale.

Learning as Inefficiency

If you stop to think about it for a moment, leak-before-failure is a type of intrinsic inefficiency where monitoring and fault-detection systems are extrinsic overheads. A leak-before-failure design implies that some parts of the system are over-designed relative to others, with respect to the nominal operating envelope of the system. In a chain with a clear weakest link, the other links can be thought of as having been over-designed to varying degrees.

In the simplest case, leak-before-failure is like deliberately designing a chain with a calibrated amount of non-uniformity in the links, to control where the weakest link lies,. You can imagine, for instance, a chain with one link being structurally weaker than the rest, so it is the first to break under tensile stress (possibly in a way that decouples the two ends of the chain in a safe way as illustrated below).

Failure landscapes designed on the basis of leak-before-failure principles can sometimes do more than detect certain exceptional conditions. They might even prevent higher-risk scenarios by increasing the probability of lower-risk scenarios. One example is what is known as sacrificial protection: using a metal that oxidizes more easily to protect one that oxidizes less easily (magnesium is often used to protect steel pipes if I am remembering my undergrad metallurgy class right).

The opposite of leak-before-failure is another idea in engineering called design optimization, which is based on the exact opposite principle that all parts of a system should fail simultaneously. This is the equivalent of designing a chain with such extraordinarily high uniformity that at a certain stress level, all the links break at once (or what is roughly an equivalent thing, the probability distribution of link failure becomes a uniform distribution, with equal expectation that any link could be the first to break, based on invisible and unmodeled non-uniformities). (...)

Leak-before-failure can be understood, in critical-path terms, as moving the bottleneck and critical path to a locus that allows a system to be primed for a particular type of high-value learning. Instead of putting it where you maximize output, utilization, productivity, or any of the other classic “lean” measures of performance.

Or to put it another way, leak-before-failure is about figuring out where to put the fat. Or to put it yet another way, it’s about figuring out how to allocate the antifragility budget. Or to put it a third way, it’s about designing systems with unique snowflake building blocks. Or to put it a fourth way, it is to swap the sacred and profane values of industrial mass manufacturing. Or to put it a fifth way, it’s about designing for a bigger envelope of known and unknown contingencies around the nominal operating regime.

Or to put it in a sixth way, and my favorite way, it’s about designing for economies of variety. Learning in open-ended ways gets cheaper with increasing (and nominally unnecessary) diversity, variability and uniqueness in system design.

Note that you sometimes don’t need to explicitly know what kind of failure scenario you’re designing for. Introducing even random variations in non-critical components that have identical nominal designs is a way to get there (one example of this is the practice, in data centers, of having multiple generations of hardware, especially hard disks, in the architecture)

The fact that you can think of the core idea in so many different ways should tell you that there is no formula for leak-before-failure thinking: it is a kind of creative-play design game which I call fat thinking. To get to economies of scale and scope, you have to think lean. To get to economies of variety on the other hand, you have to think fat.

by Venkatesh Rao, Ribbonfarm | Read more:

Images: uncredited