Huang is a patient monopolist. He drafted the paperwork for Nvidia with two other people at a Denny’s restaurant in San Jose, California, in 1993, and has run it ever since. At sixty, he is sarcastic and self-deprecating, with a Teddy-bear face and wispy gray hair. Nvidia’s main product is its graphics-processing unit, a circuit board with a powerful microchip at its core. In the beginning, Nvidia sold these G.P.U.s to video gamers, but in 2006 Huang began marketing them to the supercomputing community as well. Then, in 2013, on the basis of promising research from the academic computer-science community, Huang bet Nvidia’s future on artificial intelligence. A.I. had disappointed investors for decades, and Bryan Catanzaro, Nvidia’s lead deep-learning researcher at the time, had doubts. “I didn’t want him to fall into the same trap that the A.I. industry has had in the past,” Catanzaro told me. “But, ten years plus down the road, he was right.”

In the near future, A.I. is projected to generate movies on demand, provide tutelage to children, and teach cars to drive themselves. All of these advances will occur on Nvidia G.P.U.s, and Huang’s stake in the company is now worth more than forty billion dollars.

In September, I met Huang for breakfast at the Denny’s where Nvidia was started. (The C.E.O. of Denny’s was giving him a plaque, and a TV crew was in attendance.) Huang keeps up a semi-comic deadpan patter at all times. Chatting with our waitress, he ordered seven items, including a Super Bird sandwich and a chicken-fried steak. “You know, I used to be a dishwasher here,” he told her. “But I worked hard! Like, really hard. So I got to be a busboy.”

Huang has a practical mind-set, dislikes speculation, and has never read a science-fiction novel. He reasons from first principles about what microchips can do today, then gambles with great conviction on what they will do tomorrow. “I do everything I can not to go out of business,” he said at breakfast. “I do everything I can not to fail.” Huang believes that the basic architecture of digital computing, little changed since it was introduced by I.B.M. in the early nineteen-sixties, is now being reconceptualized. “Deep learning is not an algorithm,” he said recently. “Deep learning is a method. It’s a new way of developing software.” The evening before our breakfast, I’d watched a video in which a robot, running this new kind of software, stared at its hands in seeming recognition, then sorted a collection of colored blocks. The video had given me chills; the obsolescence of my species seemed near. Huang, rolling a pancake around a sausage with his fingers, dismissed my concerns. “I know how it works, so there’s nothing there,” he said. “It’s no different than how microwaves work.” I pressed Huang—an autonomous robot surely presents risks that a microwave oven does not. He responded that he has never worried about the technology, not once. “All it’s doing is processing data,” he said. “There are so many other things to worry about.”

In May, hundreds of industry leaders endorsed a statement that equated the risk of runaway A.I. with that of nuclear war. Huang didn’t sign it. Some economists have observed that the Industrial Revolution led to a relative decline in the global population of horses, and have wondered if A.I. might do the same to humans. “Horses have limited career options,” Huang said. “For example, horses can’t type.” As he finished eating, I expressed my concerns that, someday soon, I would feed my notes from our conversation into an intelligence engine, then watch as it produced structured, superior prose. Huang didn’t dismiss this possibility, but he assured me that I had a few years before my John Henry moment. “It will come for the fiction writers first,” he said. Then he tipped the waitress a thousand dollars, and stood up to accept his award.

by Stephen Witt, New Yorker

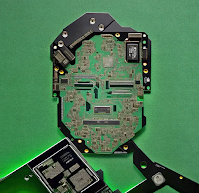

Image: Javier Jaén