Tuesday, August 31, 2021

Dialogues Between Neuroscience and Society: Music and the Brain With Pat Metheny

Tuesday, August 24, 2021

How Should an Influencer Sound?

For 10 straight years, the most popular way to introduce oneself on YouTube has been a single phrase: “Hey guys!” That’s pretty obvious to anyone who’s ever watched a YouTube video about, well, anything, but the company still put in the energy to actually track the data over the past decade. “What’s up” and “good morning” come in at second and third, but “hey guys” has consistently remained in the top spot. (Here is a fun supercut of a bunch of YouTubers saying it until the phrase ceases to mean anything at all.)

This is not where the sonic similarities of YouTubers, regardless of their content, end. For nearly as long as YouTube has existed, people have been lamenting the phenomenon of “YouTube voice,” or the slightly exaggerated, over-pronounced manner of speaking beloved by video essayists, drama commentators, and DIY experts on the platform. The ur-example cited is usually Hank Green, who’s been making videos on everything from the American health care system to the “Top 10 Freaking Amazing Explosions” since literally 2007.

Actual scholars have attempted to describe what YouTube voice sounds like. Speech pathologist and PhD candidate Erin Hall told Vice that there are two things happening here: “One is the actual segments they’re using — the vowels and consonants. They’re over-enunciating compared to casual speech, which is something newscasters or radio personalities do.” Second: “They’re trying to keep it more casual, even if what they’re saying is standard, adding a different kind of intonation makes it more engaging to listen to.”

In an investigation into YouTube voice, the Atlantic drew from several linguists to determine what specific qualities this manner of speaking shares. Essentially, it’s all about pronunciation and pacing. On camera, it takes more effort to keep someone interested (see also: exaggerated hand and facial motions). “Changing of pacing — that gets your attention,” said linguistics professor Naomi Baron. Baron noted that YouTubers tended to overstress both vowels and consonants, such as someone saying “exactly” like “eh-ckzACKTly.” She guesses that the style stems in part from informal news broadcast programs or “infotainment” like The Daily Show. Another, Mark Liberman of the University of Pennsylvania, put it bluntly: It’s “intellectual used-car-salesman voice,” he wrote. He also compared it to a carnival barker. (...)

Last week a TikTok came on my For You page that explored digital accents. TikToker @Averybrynn was referring specifically to what she called the “beauty YouTuber dialect,” which she described as “like they weirdly pronounce everything just a little bit too much in these small little snippets?” What makes it distinct from regular YouTube voice is that each word tends to be longer than it should, while also being bookended by a staccato pause. There’s also a common inclination to turn short vowels into long vowels, like saying “thee” instead of “the.” Others in the comments pointed out the repeated use of the first person plural when referring to themselves (“and now we’re gonna go in with a swipe of mascara”), while one linguistics major noted that this was in fact a “sociolect,” not a dialect, because it refers to a social group.

It’s the sort of speech typically associated with female influencers who, by virtue of the job, we assume are there to endear themselves to the audience or present a trustworthy sales pitch. But what’s most interesting to watch about YouTube voice and the influencer accent is how normal people have adapted to it in their own, regular-person TikTok videos and Instagram stories. If you listen closely to enough people’s front-facing camera videos, you’ll hear a voice that sounds somewhere between a TED Talk and sponsored content, perhaps even within your own social circles. Is this a sign that as influencer culture bleeds into our everyday lives, many of the quirks that professionals use will become normal for us too? Maybe! Is it a sign that social platforms are turning us all into salespeople? Also maybe!

But here’s the question I haven’t heard anyone ask yet: If everyone finds these ways of speaking annoying to some degree, then how should people actually talk into the camera?

by Rebecca Jennings, Vox | Read more:

Image: YouTube/Anyone Can Play Guitar

[ed. Got the link for this article from one of my top three favorite online guitar instructors: Adrian Woodward, who normally sounds like a cross between Alfred Hitchcock and the Geico gecko. Here he tries a YouTube voice for fun. If you're a guitar player, do check out his instruction videos on YouTube at Anyone Can Play Guitar. High quality, and great dry personality.]

Friday, August 20, 2021

Tony Joe White - Behind the Music

[ed. Part 2 here. Been trying to learn some Tony Joe White guitar style lately. Great technique. If you're unfamiliar with him, or only know Rainy Night in Georgia and Polk Salad Annie, check out some of his other stuff on YouTube like this, this, this, and this whole album (Closer to the Truth). I love the story about Tina Turner recording a few of his songs. Starts at 30:25 - 34:30, and how he got into songwriting at 13:40 - 16:08.]

Thursday, August 5, 2021

American Gentry

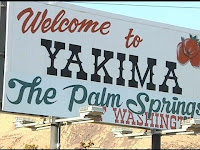

For the first eighteen years of my life, I lived in the very center of Washington state, in a city called Yakima. Shout-out to the self-proclaimed Palm Springs of Washington!

(Yes, that’s a real sign. It’s one of the few things outsiders tend to remember about Yakima, along with excellent cheeseburgers from Miner’s and one of the nation’s worst COVID-19 outbreaks.)

Yakima is a place I loved dearly and have returned to often over the years since, but I’ve never lived there again on a permanent basis. The same was true of most of my close classmates in high school: If they had left for college, most had never returned for longer than a few months at a time. Practically all of them now lived in major metro areas scattered across the country, not our hometown with its population of 90,000.

There were a lot of talented and interesting people in that group, most of whom I had more or less lost touch with over the intervening years. A few years ago, I had the idea of interviewing them to ask precisely why they haven’t come back and how they felt about it. That piece never really came together, but it was fascinating talking to a bunch of folks I hadn’t spoken to in years regarding what they thought and how they felt about home.

For the most part, their answers to my questions revolved around work. Few bore our hometown much, if any, ill will; they’d simply gone away to college, many had gone to graduate school after that, and the kinds of jobs they were now qualified for didn’t really exist in Yakima. Its economy revolved then, and revolves to an ever greater extent now, around commercial agriculture. There are other employers, but not much demand for highly educated professionals - which is generally what my high-achieving classmates became - relative to a larger city.

The careers they ended up pursuing, in corporate or management consulting, non-profits, finance, media, documentary filmmaking, and the like, exist to a much greater degree in major metropolitan areas. There are a few in Portland, and others in New York, Philadelphia, Los Angeles, Austin, and me in Phoenix. A great many of my former classmates live in Seattle. We were lucky that one of the country’s most booming major metros, with the most highly educated Millennial population in the country, is 140 miles away from where we grew up on the other side of the Cascade Mountains: close in absolute terms, but a world away culturally, economically, and politically.

Only a few have returned to Yakima permanently after their time away. Those who have seem to like it well enough; for a person lucky and accomplished enough to get one of those reasonably affluent professional jobs, Yakima - like most cities in the US - isn’t a bad place to live. The professional-managerial class, and the older Millennials in the process of joining it, has a pretty excellent material standard of living regardless of precisely where they’re at.

But very few of my classmates really belonged to the area’s elite. It wasn’t a city of international oligarchs, but one dominated by its wealthy, largely agricultural property-owning class. They mostly owned, and still own, fruit companies: apples, cherries, peaches, and now hops and wine-grapes. The other large-scale industries in the region, particularly commercial construction, revolve at a fundamental level around agriculture: They pave the roads on which fruits and vegetables are transported to transshipment points, build the warehouses where the produce is stored, and so on.

Commercial agriculture is a lucrative industry, at least for those who own the orchards, cold storage units, processing facilities, and the large businesses that cater to them. They have a trusted and reasonably well-paid cadre of managers and specialists in law, finance, and the like - members of the educated professional-managerial class that my close classmates and I have joined - but the vast majority of their employees are lower-wage laborers. The owners are mostly white; the laborers are mostly Latino, a significant portion of them undocumented immigrants. Ownership of the real, core assets is where the region’s wealth comes from, and it doesn’t extend down the social hierarchy. Yet this bounty is enough to produce hilltop mansions, a few high-end restaurants, and a staggering array of expensive vacation homes in Hawaii, Palm Springs, and the San Juan Islands.

This class of people exists all over the United States, not just in Yakima. So do mid-sized metropolitan areas, the places where huge numbers of Americans live but which don’t figure prominently in the country’s popular imagination or its political narratives: San Luis Obispo, California; Odessa, Texas; Bloomington, Illinois; Medford, Oregon; Hilo, Hawaii; Dothan, Alabama; Green Bay, Wisconsin. (As an aside, part of the reason I loved Parks and Recreation was because it accurately portrayed life in a place like this: a city that wasn’t small, which served as the hub for a dispersed rural area, but which wasn’t tightly connected to a major metropolitan area.)

This kind of elite’s wealth derives not from their salary - this is what separates them from even extremely prosperous members of the professional-managerial class, like doctors and lawyers - but from their ownership of assets. Those assets vary depending on where in the country we’re talking about; they could be a bunch of McDonald’s franchises in Jackson, Mississippi, a beef-processing plant in Lubbock, Texas, a construction company in Billings, Montana, commercial properties in Portland, Maine, or a car dealership in western North Carolina. Even the less prosperous parts of the United States generate enough surplus to produce a class of wealthy people. Depending on the political culture and institutions of a locality or region, this elite class might wield more or less political power. In some places, they have an effective stranglehold over what gets done; in others, they’re important but not all-powerful.

Wherever they live, their wealth and connections make them influential forces within local society. In the aggregate, through their political donations and positions within their localities and regions, they wield a great deal of political influence. They’re the local gentry of the United States.

by Patrick Wyman, Perspectives: Past, Present, and Future | Read more:

Image: uncredited

[ed. I live 30 miles from Yakima, so this is of some interest.]

Tuesday, May 11, 2021

Is Capitalism Killing Conservatism?

The report Wednesday that U.S. birthrates fell to a record low in 2020 was expected but still grim. On Twitter the news was greeted, characteristically, by conservative laments and liberal comments implying that it’s mostly conservatism’s fault — because American capitalism allegedly makes parenthood unaffordable, work-life balance impossible and atomization inevitable.

This is a specific version of a long-standing argument about the tensions between traditionalism and capitalism, which seems especially relevant now that the right doesn’t know what it’s conserving anymore.

In a recent essay for New York Magazine, for instance, Eric Levitz argues that the social trends American conservatives most dislike — the rise of expressive individualism and the decline of religion, marriage and the family — are driven by socioeconomic forces the right’s free-market doctrines actively encourage. “America’s moral traditionalists are wedded to an economic system that is radically anti-traditional,” he writes, and “Republicans can neither wage war on capitalism nor make peace with its social implications.”

This argument is intuitively compelling. But the historical record is more complex. If the anti-traditional churn of capitalism inevitably doomed religious practice, communal associations or the institution of marriage, you would expect those things to simply decline with rapid growth and swift technological change. Imagine, basically, a Tocquevillian early America of sturdy families, thriving civic life and full-to-bursting pews giving way, through industrialization and suburbanization, to an ever-more-individualistic society.

But that’s not exactly what you see. Instead, as Lyman Stone points out in a recent report for the American Enterprise Institute (where I am a visiting fellow), the Tocquevillian utopia didn’t really yet exist when Alexis de Tocqueville was visiting America in the 1830s. Instead, the growth of American associational life largely happened during the Industrial Revolution. The rise of fraternal societies is a late-19th- and early-20th-century phenomenon. Membership in religious bodies rises across the hypercapitalist Gilded Age. The share of Americans who married before age 35 stayed remarkably stable from the 1890s till the 1960s, through booms and depressions and drastic economic change.

This suggests that social conservatism can be undermined by economic dynamism but also respond dynamically in its turn — through a constant “reinvention of tradition,” you might say, manifested in religious revival, new forms of association, new models of courtship, even as older forms pass away.

It’s only after the 1960s that this conservative reinvention seems to fail, with churches dividing, families failing, associational life dissolving. And capitalist values, the economic and sexual individualism of the neoliberal age, clearly play some role in this change.

But strikingly, after the 1960s, economic dynamism also diminishes as productivity growth drops and economic growth decelerates. So it can’t just be capitalist churn undoing conservatism, exactly, if economic stagnation and social decay go hand in hand.

One small example: Rates of geographic mobility in the United States, which you could interpret as a measure of how capitalism uproots people from their communities, have declined over the last few decades. But this hasn’t somehow preserved rural traditionalism. Quite the opposite: Instead of a rooted and religious heartland, you have more addiction, suicide and anomie.

Or a larger example: Western European nations do more to tame capitalism’s Darwinian side than America, with more regulation and family supports and welfare-state protections. Are their societies more fecund or religious? No, their economic stagnation and demographic decline have often been deeper than our own.

So it’s not that capitalist dynamism inevitably dissolves conservative habits. It’s more that the wealth this dynamism piles up, the liberty it enables and the technological distractions it invents let people live more individualistically — at first happily, with time perhaps less so — in ways that eventually undermine conservatism and dynamism together. At which point the peril isn’t markets red in tooth and claw, but a capitalist endgame that resembles Aldous Huxley’s “Brave New World,” with a rich and technologically proficient world turning sterile and dystopian.

by Ross Douthat, NY Times | Read more:

Image: Carlos Javier Ortiz/Redux

Monday, May 10, 2021

The Truth about Painkillers

In October 2003, the Orlando Sentinel published "OxyContin under Fire," a five-part series that profiled several "accidental addicts" — individuals who were treated for pain and wound up addicted to opioids. They "put their faith in their doctors and ended up dead, or broken" the Sentinel wrote of these victims. Among them were a 36-year-old computer-company executive from Tampa and a 39-year-old Kissimmee handyman and father of three — the latter of whom died of an overdose.

The Sentinel series helped set the template for what was to become the customary narrative for reporting on the opioid crisis. Social worker Brooke Feldman called attention to the prototype in 2017:

Hannah was a good kid....Straight A student....Bright future. If it weren't for her doctor irresponsibly prescribing painkillers for a soccer injury and those damn pharmaceutical companies getting rich off of it, she never would have wound up using heroin.

Feldman, who has written and spoken openly about her own drug problem, knows firsthand of the deception embedded in the accidental-addict story. She received her first Percocet from a friend years after she'd been a serious consumer of marijuana, alcohol, benzodiazepines, PCP, and cocaine.

Indeed, four months after the original "OxyContin under Fire" story ran, the paper issued a correction: Both the handyman and the executive were heavily involved with drugs before their doctors ever prescribed OxyContin. Like Feldman, neither man was an accidental addict.

Yet one cannot overstate the media's continued devotion to the narrative, as Temple University journalism professor Jillian Bauer-Reese can attest. Soon after she created an online repository of opioid recovery stories, reporters began calling her, making very specific requests. "They were looking for people who had started on a prescription from a doctor or a dentist," she told the Columbia Journalism Review. "They had essentially identified a story that they wanted to tell and were looking for a character who could tell that story."

The story, of course, was the one about the accidental addict. But to what purpose?

Some reporters, no doubt, simply hoped to call attention to the opioid epidemic by showcasing sympathetic and relatable individuals — victims who started out as people like you and me. It wouldn't be surprising if drug users or their loved ones, aware that a victim-infused narrative would dilute the stigma that comes with addiction, had handed reporters a contrived plotline themselves.

Another theory — perhaps too cynical, perhaps not cynical enough — is that the accidental-addict trope was irresistible to journalists in an elite media generally unfriendly to Big Pharma. Predisposed to casting drug companies as the sole villain in the opioid epidemic, they seized on the story of the accidental addict as an object lesson in what happens when greedy companies push a product that is so supremely addictive, it can hook anyone it's prescribed to.

Whatever the media's motives, the narrative does not fit with what we've learned over two decades since the opioid crisis began. We know now that the vast majority of patients who take pain relievers like oxycodone and hydrocodone never get addicted. We also know that people who develop problems are very likely to have struggled with addiction, or to be suffering from psychological trouble, prior to receiving opioids. Furthermore, we know that individuals who regularly misuse pain relievers are far more likely to keep obtaining them from illicit sources rather than from their own doctors.

In short, although accidental addiction can happen, otherwise happy lives rarely come undone after a trip to the dental surgeon. And yet the exaggerated risk from prescription opioids — disseminated in the media but also advanced by some vocal physicians — led to an overzealous regime of pill control that has upended the lives of those suffering from real pain.

To be sure, some restrictions were warranted. Too many doctors had prescribed opioids far too liberally for far too long. But tackling the problem required a scalpel, not the machete that health authorities, lawmakers, health-care systems, and insurers ultimately wielded, barely distinguishing between patients who needed opioids for deliverance from disabling pain and those who sought pills for recreation or profit, or to maintain a drug habit.

The parable of the accidental addict has resulted in consequences that, though unintended, have been remarkably destructive. Fortunately, a peaceable co-existence between judicious pain treatment, the curbing of pill diversion, and the protection of vulnerable patients against abuse and addiction is possible, as long as policymakers, physicians, and other authorities are willing to take the necessary steps. (...)

Many physicians... began refusing to prescribe opioids and withdrawing patients from their stable opioid regimens around 2011 — approximately the same time as states launched their reform efforts. Reports of pharmacies declining to fill prescriptions — even for patients with terminal illness, cancer pain, or acute post-surgical pain — started surfacing. At that point, 10 million Americans were suffering "high impact pain," with four in five being unable to work and a third no longer able to perform basic self-care tasks such as washing themselves and getting dressed.

Their prospects grew even more tenuous with the release of the CDC's "Guideline for Prescribing Opioids for Chronic Pain" in 2016. The guideline, which was labeled non-binding, offered reasonable advice to primary-care doctors — for example, it recommended going slow when initiating doses and advised weighing the harms and benefits of opioids. It also imposed no cap on dosage, instead advising prescribers to "avoid increasing dosage to ≥90 MME per day." (An MME, or morphine milligram equivalent, is a basic measure of opioid potency relative to morphine: A 15 mg tablet of morphine equals 15 MMEs; 15 mg of oxycodone converts to about 25 mg morphine.)

Yet almost overnight, the CDC guideline became a new justification for dose control, with the 90 MME threshold taking on the power of an enforceable national standard. Policymakers, insurers, health-care systems, quality-assurance agencies, pharmacies, Department of Veterans Affairs medical centers, contractors for the U.S. Centers for Medicare and Medicaid Services, and state health authorities alike employed 90 MME as either a strict daily limit or a soft goal — the latter indicating that although exceptions were possible, they could be made only after much paperwork and delay.

As a result, prescribing fell even more sharply, in terms of both dosages per capita and numbers of prescriptions written. A 2019 Quest Diagnostics survey of 500 primary-care physicians found that over 80% were reluctant to accept patients who were taking prescription opioids, while a 2018 survey of 219 primary-care clinics in Michigan found that 41% of physicians would not prescribe opioids for patients who weren't already receiving them. Pain specialists, too, were cutting back: According to a 2019 survey conducted by the American Board of Pain Medicine, 72% said they or their patients had been required to reduce the quantity or dose of medication. In the words of Dr. Sean Mackey, director of Stanford University's pain-management program, "[t]here's almost a McCarthyism on this, that's silencing so many [health professionals] who are simply scared."

by Sally Satel, National Affairs | Read more:

Image: uncredited

[ed. Finally, a voice in the wilderness. Relatedly, I'd like to see a more nuanced discussion on the topics of dependency and addiction. The author (like everyone else) assumes anything addictive is unquestionably bad (especially if it's something that makes you feel good). If you need insulin, are you dependent and an addict? Of course, but who would deny insulin to a diabetic, and what's the difference? How might the world look if people had a steady and reliable supply of medications, for whatever reasons? Couldn't be much worse than it is now and might solve a lot of social problems. RIP Tom Petty and Prince.]

Why Do All Records Sound the Same?

When you turn on the radio, you might think music all sounds the same these days, then wonder if you’re just getting old. But you’re right, it does all sound the same. Every element of the recording process, from the first takes to the final tweaks, has been evolved with one simple aim: control. And that control often lies in the hands of a record company desperate to get their song on the radio. So they’ll encourage a controlled recording environment (slow, high-tech and using malleable digital effects).

Every finished track is then coated in a thick layer of audio polish before being market-tested and dispatched to a radio station, where further layers of polish are applied until the original recording is barely visible. That’s how you make a mainstream radio hit, and that’s what record labels want. (...)

When people talk about a shortage of ‘warm’ or ‘natural’ recording, they often blame digital technology. It’s a red herring, because copying a great recording onto CD or into an iPod doesn’t stop it sounding good. Even a self-consciously old-fashioned recording like Arif Mardin’s work with Norah Jones was recorded on two-inch tape, then copied into a computer for editing, then mixed through an analogue console back into the computer for mastering. It’s now rare to hear recently produced audio which has never been through any analogue-digital conversion, although a vinyl White Stripes album might qualify.

Until surprisingly recently—maybe 2002—the majority of records were made the same way they’d been made since the early 70s: through vast, multi-channel recording consoles onto 24 or 48-track tape. At huge expense, you’d rent purpose-built rooms containing perhaps a million pounds’ worth of equipment, employing a producer, engineer and tape operator. Digital recording into a computer had been possible since the mid 90s, but major producers were often sceptical.

By 2000, Pro Tools, the industry-standard studio software, was mature and stable and sounded good. With a laptop and a small rack of gear costing maybe £25,000 you could record most of a major label album. So the business shifted from the console—the huge knob-covered desk in front of a pair of wardrobe-sized monitor speakers—to the computer screen. You weren’t looking at the band or listening to the music, you were staring at 128 channels of wiggling coloured lines.

“There’s no big equipment any more,” says John Leckie. “No racks of gear with flashing lights and big knobs. The reason I got into studio engineering was that it was the closest thing I could find to getting into a space ship. Now, it isn’t. It’s like going to an accountant. It changes the creative dynamic in the room when it’s just one guy sitting staring at a computer screen.”

“Before, you had a knob that said ‘Bass.’ You turned it up, said ‘Ah, that’s better’ and moved on. Now, you have to choose what frequency, and the slope, and how many dBs, and it all makes a difference. There’s a constant temptation to tamper.”

What makes working with Pro Tools really different from tape is that editing is absurdly easy. Most bands record to a click track, so the tempo is locked. If a guitarist plays a riff fifty times, it’s a trivial job to pick the best one and loop it for the duration of the verse.

“Musicians are inherently lazy,” says John. “If there’s an easier way of doing something than actually playing, they’ll do that.” A band might jam together for a bit, then spend hours or days choosing the best bits and pasting a track together. All music is adopting the methods of dance music, of arranging repetitive loops on a grid. With the structure of the song mapped out in coloured boxes on screen, there’s a huge temptation to fill in the gaps, add bits and generally clutter up the sound. (...)

Once the band and producer are finished, their multitrack—usually a hard disk containing Pro Tools files for maybe 128 channels of audio—is passed onto a mix engineer. L.A.-based JJ Puig has mixed records for Black Eyed Peas, U2, Snow Patrol, Green Day and Mary J Blige. His work is taken so seriously that he’s often paid royalties rather than a fixed fee. He works from Studio A at Ocean Way Studios on the Sunset Strip. The control room looks like a dimly-lit library. Instead of books, the floor-to-ceiling racks are filled with vintage audio gear. This is the room where Frank Sinatra recorded “It Was A Very Good Year” and Michael Jackson recorded “Beat It.”

And now, it belongs to JJ Puig. Record companies pay him to essentially re-produce the track, but without the artist and producer breathing down his neck. He told Sound On Sound magazine: “When I mixed The Rolling Stones’ A Bigger Bang album, I reckoned that one of the songs needed a tambourine and a shaker, so I put it on. If Glyn Johns [who produced Sticky Fingers] had done that many years ago, he’d have been shot in the head. Mick Jagger was kind of blown away by what I’d done, no-one had ever done it before on a Stones record, but he couldn’t deny that it was great and fixed the record.”

When a multitrack arrives, JJ’s assistant tidies it up, re-naming the tracks, putting them in the order he’s used to and colouring the vocal tracks pink. Then JJ goes through tweaking and polishing and trimming every sound that will appear on the record. Numerous companies produce plugins for Pro Tools which are digital emulations of the vintage rack gear that still fills Studio One. If he wants to run Fergie’s vocal through a 1973 Roland Space Echo and a 1968 Marshall stack, it takes a couple of clicks.

Some of these plugins have become notorious. Auto-Tune, developed by former seismologist Andy Hildebrand, was released as a Pro Tools plugin in 1997. It automatically corrects out-of-tune vocals by locking them to the nearest note in a given key. The L1 Ultramaximizer, released in 1994 by the Israeli company Waves, launched the latest round of the loudness war. It’s a very simple-looking plugin which neatly and relentlessly makes music sound a lot louder (a subject we’ll return to in a little while).

When JJ has tweaked and polished and trimmed and edited, his stereo mix is passed on to a mastering engineer, who prepares it for release. What happens to that stereo mix is an extraordinary marriage of art, science and commerce. The tools available are superficially simple—you can really only change the EQ or the volume. But the difference between a mastered and unmastered track is immediately obvious. Mastered recordings sound like real records. That is to say, they all sound a little bit alike.

by Tom Whitwell, Cuepoint | Read more:

Image: uncredited

Sunday, May 9, 2021

Saturday, May 8, 2021

Jack Nicklaus: Golf My Way

[ed. Can't believe it. Jack Nicklaus's Golf My Way on YouTube. One of the best golf instruction videos, ever.]

Why Stocks Soared While America Struggled

You would never know how terrible the past year has been for many Americans by looking at Wall Street, which has been going gangbusters since the early days of the pandemic.

“On the streets, there are chants of ‘Stop killing Black people!’ and ‘No justice, no peace!’ Meanwhile, behind a computer, one of the millions of new day traders buys a stock because the chart is quickly moving higher,” wrote Chris Brown, the founder and managing member of the Ohio-based hedge fund Aristides Capital in a letter to investors in June 2020. “The cognitive dissonance is overwhelming at times.”

The market was temporarily shaken in March 2020, as stocks plunged for about a month at the outset of the Covid-19 outbreak, but then something strange happened. Even as hundreds of thousands of lives were lost, millions of people were laid off and businesses shuttered, protests against police violence erupted across the nation in the wake of George Floyd’s murder, and the outgoing president refused to accept the outcome of the 2020 election — supposedly the market’s nightmare scenario — for weeks, the stock market soared. After the jobs report from April 2021 revealed a much shakier labor recovery might be on the horizon, major indexes hit new highs.

The disconnect between Wall Street and Main Street, between corporate CEOs and the working class, has perhaps never felt so stark. How can it be that food banks are overwhelmed while the Dow Jones Industrial Average hits an all-time high? For a year that’s been so bad, it’s been hard not to wonder how the stock market could be so good.

To the extent that there can ever be an explanation for what’s going on with the stock market, there are some straightforward financial answers here. The Federal Reserve took extraordinary measures to support financial markets and reassure investors it wouldn’t let major corporations fall apart. Congress did its part as well, pumping trillions of dollars into the economy across multiple relief bills. Turns out giving people money is good for markets, too. Tech stocks, which make up a significant portion of the S&P 500, soared. And with bond yields so low, investors didn’t really have a more lucrative place to put their money.

To put it plainly, the stock market is not representative of the whole economy, much less American society. And what it is representative of did fine.

“No matter how many times we keep on saying the stock market is not the economy, people won’t believe it, but it isn’t,” said Paul Krugman, a Nobel Prize-winning economist and New York Times columnist. “The stock market is about one piece of the economy — corporate profits — and it’s not even about the current or near-future level of corporate profits, it’s about corporate profits over a somewhat longish horizon.”

Still, those explanations, to many people, don’t feel fair. Investors seem to have remained inconceivably optimistic throughout real turmoil and uncertainty. If the answer to why the stock market was fine is basically that’s how the system works, the follow-up question is: Should it?

“Talking about the prosperous nature of the stock market in the face of people still dying from Covid-19, still trying to get health care, struggling to get food, stay employed, it’s an affront to people’s actual lived experience,” said Solana Rice, the co-founder and co-executive director of Liberation in a Generation, which pushes for economic policies that reduce racial disparities. “The stock market is not representative of the makeup of this country.”

Inequality is not a new theme in the American economy. But the pandemic exposed and reinforced the way the wealthy and powerful experience what’s happening so much differently than those with less power and fewer means, and it forced the question of how the prosperity of those at the top could be better shared with those at the bottom. There are certainly ideas out there, though Wall Street might not like them.

by Emily Stewart, Vox | Read more:

Image: Vox

[ed. See also: Counting the Chickens Twice; and, Always a Reckoning (Hussman Funds).]

Friday, May 7, 2021

Liberal Democratic Party (Japan)

The Liberal Democratic Party of Japan (自由民主党, Jiyū-Minshutō), frequently abbreviated to LDP or Jimintō (自民党), is a conservative political party in Japan.

The LDP has almost continuously been in power since its foundation in 1955—a period called the 1955 System—with the exception of a period between 1993 and 1994, and again from 2009 to 2012. In the 2012 election it regained control of the government. It holds 285 seats in the lower house and 113 seats in the upper house, and in coalition with the Komeito since 1999, the governing coalition has a supermajority in both houses. Prime Minister Yoshihide Suga, former Prime Minister Shinzo Abe and many present and former LDP ministers are also known members of Nippon Kaigi, an ultranationalist and monarchist organization.

The LDP is not to be confused with the now-defunct Democratic Party of Japan (民主党, Minshutō), the main opposition party from 1998 to 2016, or the Democratic Party (民進党, Minshintō), the main opposition party from 2016 to 2017.[16] The LDP is also not to be confused with the 1998-2003 Liberal Party (自由党, Jiyūtō) or the 2016-2019 Liberal Party (自由党, Jiyū-tō). (...)

Beginnings

The LDP was formed in 1955 as a merger between two of Japan's political parties, the Liberal Party (自由党, Jiyutō, 1945–1955, led by Shigeru Yoshida) and the Japan Democratic Party (日本民主党, Nihon Minshutō, 1954–1955, led by Ichirō Hatoyama), both right-wing conservative parties, as a united front against the then popular Japan Socialist Party (日本社会党, Nipponshakaitō), now Social Democratic Party (社会民主党, Shakaiminshutō). The party won the following elections, and Japan's first conservative government with a majority was formed by 1955. It would hold majority government until 1993.

The LDP began with reforming Japan's international relations, ranging from entry into the United Nations, to establishing diplomatic ties with the Soviet Union. Its leaders in the 1950s also made the LDP the main government party, and in all the elections of the 1950s, the LDP won the majority vote, with the only other opposition coming from left-wing politics, made up of the Japan Socialist Party and the Japanese Communist Party.

Ideology

The LDP has not espoused a well-defined, unified ideology or political philosophy, due to its long-term government, and has been described as a "catch-all" party. Its members hold a variety of positions that could be broadly defined as being to the right of the opposition parties. The LDP is usually associated with conservatism and Japanese nationalism. The LDP traditionally identified itself with a number of general goals: rapid, export-based economic growth; close cooperation with the United States in foreign and defense policies; and several newer issues, such as administrative reform. Administrative reform encompassed several themes: simplification and streamlining of government bureaucracy; privatization of state-owned enterprises; and adoption of measures, including tax reform, in preparation for the expected strain on the economy posed by an aging society. Other priorities in the early 1990s included the promotion of a more active and positive role for Japan in the rapidly developing Asia-Pacific region, the internationalization of Japan's economy by the liberalization and promotion of domestic demand (expected to lead to the creation of a high-technology information society) and the promotion of scientific research. A business-inspired commitment to free enterprise was tempered by the insistence of important small business and agricultural constituencies on some form of protectionism and subsidies. In addition, the LDP opposes the legalization of same-sex marriage.

by Wikipedia | Read more:

Image: Wikipedia

[ed. When countries are run like corporations (the LDP has been in power most of my life, how could I not have known this?). See also: the 1955 System link; and Did Japan’s Prime Minister Abe Serve Obama Beefsteak-Flavored Revenge for US Trade Representative Froman’s TPP Rudeness? (Naked Capitalism).]

Thursday, May 6, 2021

Three Club Challenge

You probably have a club in your bag that you love. One that’s as reliable as Congress is dysfunctional. For Kevin Costner in Tin Cup it was his trusty seven iron. For someone like Henrik Stenson, probably his three wood. For me, it’s my eight iron. There are certain clubs that, whether through experience, ability or default, just seem to stand out.

But then there are those clubs that just give us the willies. For example, unlike Henrik I’d put my three wood in that category. I’m convinced no amount of practice will ever make me better with that club. I invariably chunk it or thin it, rarely hitting it straight, but I keep carrying it around because I’m convinced I might need to hit a 165 yard blooper on a 210 yard approach. A friend of mine has problems with his driver. He carries it around off and on for weeks and never uses it because “it’s just not working”. Little wonder.

If you’ve been golfing for a while you’ve probably indulged in the ‘what if’ question. I’m not talking about the misery stories you hear in the clubhouse after a round, those tears-in-the-beers laments of ‘what if I’d only laid up instead of trying to cut that corner’, or, ‘what if I hadn’t bladed that bunker shot into the lake’? Bad decisions and bad breaks. Conversations like those will go on as long as golf exists and really aren’t all that interesting (except, perhaps, for the person drowning their sorrows).

What I’m talking about is a more existential question. One that goes to the heart of every golfer’s game: What if you only had three clubs to play with, which ones would you choose? And why?

It’s a fun thought experiment because it makes you think about your abilities from a more distilled perspective: how well do I hit my clubs, and what’s the best combination to use to get around a course in the lowest possible score?

Maybe you’ve had the chance to compete in a three-club tournament. They’re out there. Once in a while someone puts one together and they sound like a lot of fun. I’ve never had the opportunity to play in one myself, but recently did get the chance to try my own three-club experiment with some surprising results.

Caveat: I’m not here to suggest that there’s one right mix of clubs for everyone, but I will say that it’s possible to shoot par golf (or better) with only three golf clubs.

First, some background. I’m an old guy, a senior golfer who’s been playing the game for nearly 25 years. High single to low double digit handicap (I’m guessing since I don’t keep a handicap). Usually shoot in the low to mid-80s with an occasional excursion into the high 70s.

Lately I’ve been playing on a nice nine hole course that rarely sees more than a dozen golfers at any time, even on the weekends. It’s not an executive course or a goat-track. In fact it’s as challenging a course as any muni, if not more so, and definitely in better condition. The greenkeeping staff keeps it in excellent shape and shares resources with a nearby Nicklaus-designed course. It’s your average really nice nine hole course, and would command premium prices if expanded to 18 holes.

Anyway, because there’s hardly anyone around I usually play three balls, mainly for exercise and practice. I’ve always carried my bag, so it’s easy to drive up, unload my stuff, stick three balls in my pocket and take off.

A while back we had some strong winds. Stiff, persistent winds. I don’t mind playing in wind, but these were strong enough that my stand bag kept falling over when I set it down, and twisting around my body, throwing me off balance and making it hard to walk. I must have looked a bit like a drunk staggering up the fairways (not an uncommon sight on some of the courses I’ve played).

So I decided to dump the bag and play with three clubs.

But which ones? Keep in mind that everyone is different, so the clubs I selected are the ones I thought would work best for me.

To begin with, I realized that two were already taken. First, I’d need a putter. According to Golf Digest and Game Golf, you need a putter roughly 41 percent of the time on average. I don’t know about you, but I’m not going to try putting with a driver, three wood, or hybrid no matter how utilitarian they might be. It just feels too awkward. Perhaps it’s just personal preference, and if that’s not a big deal for you, go for it.

The next club I selected was something that could get me close from 120 yards out, help around the fringe, and get me out of a bunker. No-brainer: sand wedge. I thought about a lob wedge but it didn’t have the distance, and a gap or pitching wedge was just too tough out of the sand and didn’t have enough loft for short flops to tight pins.

Finally, the last club in my arsenal. Six iron. Why the six? A number of reasons. First, and probably most important: I’m terrible with my six iron. Not as bad as my three wood, but for some reason the six has always given me problems. Maybe it’s because I’ve never been fitted for clubs and it always stood out as being more difficult than most of the others. I don’t know why, really. In any case, I thought, “Why not get a little more practice and see if I can get this guy under control?” It also has the distance. When I hit it well I can get it roughly 170 yards. Maybe. So that completed the set. My new streamlined self was ready for the wind.